In one of the foundational concepts of Buddhist philosophy, the Five Aggregates, we unsurprisingly (at least to me) find counterparts in the world of machine learning (ML) and artificial intelligence (AI). Genuine AI is currently beyond the large language models (LLMs, e.g., ChatGPT, Grok, Gemini) that have become very familiar to us over the past year. However, LLMs are the counterpart of one of the Five Aggregates. The ML versions of the other four aggregates touch upon other aspects of ML that the general public has lived with for decades.

In this short blog, I lay out each of the Five Aggregates alongside a major component of ML, in the context of pondering what the intelligence of a business might be.

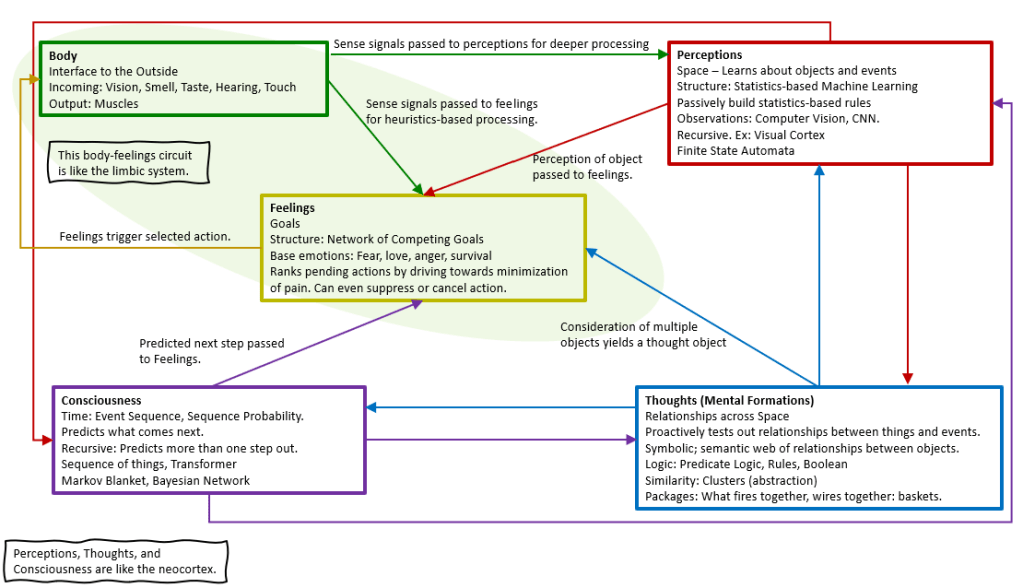

The Body: Sensors and Senses

At the forefront of this exploration is the body, traditionally viewed as the physical vessel of existence. In a technological context, the body’s sensory apparatus mirrors the functionality of sensors, gathering data from the external environment. These sensors, akin to our body’s senses (vision, hearing, smell, taste, touch, and the many others that go unrecognized), are not mere passive receivers but are equipped with edge computing capabilities, simple minds of their own, processing information at the source. This parallel extends to the immune cells, which act as internal sensors, vigilantly monitoring and responding to changes within the body. This analogy underscores a system where each component, though autonomous, plays a critical role in the collective experience of being.

Feelings: The Guiding Compass of KPIs

Feelings, in Buddhist thought, are the affective responses to sensory experiences, guiding beings toward pleasure and away from pain. When viewed through the prism of modern technology, feelings resemble the top-level objectives or Key Performance Indicators (KPIs) of a system. Just as feelings influence our decisions by coloring our perceptions and motivations, KPIs drive the performance and direction of business systems, marking the path toward desired outcomes and steering them clear of undesirable states.

Curiosity isn’t usually thought of as a feeling. But it’s one of the most crucial for sentience. It drives us to be proactive. In a sense, we have a mechanism for making problems for ourselves.

Perceptions: The Machine Learning Models of Experience

Perception, the interpretative layer of experience, closely aligns with the predictions made by machine learning (ML) models. These models, through supervised learning, classify and interpret data based on prior experiences or preprogrammed criteria, much like how humans perceive and make sense of their surroundings. This comparison not only highlights the predictive nature of perception but also its foundational role in shaping our understanding of the world, mirroring the way machine learning models extrapolate from known data to make informed predictions.
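To make the idea of perception as supervised learning a little more concrete, here is a minimal sketch of a nearest-centroid classifier in plain Python. It is only a toy under stated assumptions: the sensor readings, the labels ("normal", "failing"), and the function names `fit_centroids` and `perceive` are all hypothetical and invented for illustration. Real ML models are far richer, but the shape is the same: labeled prior experience goes in, and an interpretation of a new reading comes out.

```python
from collections import defaultdict
from math import dist


def fit_centroids(samples, labels):
    """Learn one average feature vector (centroid) per label."""
    grouped = defaultdict(list)
    for features, label in zip(samples, labels):
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def perceive(centroids, reading):
    """Interpret a new reading as the label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], reading))


# Hypothetical prior experience: (temperature_c, vibration_mm_s) readings
# from a machine, each labeled with what turned out to be its state.
history = [(40.0, 1.0), (42.0, 1.2), (85.0, 6.5), (90.0, 7.0)]
outcomes = ["normal", "normal", "failing", "failing"]

centroids = fit_centroids(history, outcomes)
print(perceive(centroids, (88.0, 6.0)))  # a new, unlabeled reading
```

The model never sees the new reading's true state. It simply extrapolates from what it has been shown, which is the sense in which perception is prediction.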

The vast majority of ML models are based on statistics. Non-sentient consciousness is based on and driven by the statistical nature of evolution. Indeed, all that we sense is the average result of a massive number of microscopic interactions. Evolution could be thought of in this way: what has usually worked will probably be the best choice.

I’ve been very fond of Non-Deterministic Finite Automata (NFA) since Micron first developed the (now defunct) Automata Processor (ca. 2012-2013). NFAs are powerful for real-time detection, acting as rule-based structures that validate sequences as they unfold. Within Perception, NFAs can play a pivotal role in integrating the Five ML Aggregates, serving as a unifying layer that detects patterns and transitions dynamically.

This integration resembles how a shadow captures the ever-changing state of objects illuminated by light—a snapshot of the real-time interactions between perception, rules, and sensory data. NFAs in perception enhance the system’s ability to not only interpret but also act immediately on recognized patterns, bridging deterministic rules with the probabilistic underpinnings of machine learning.
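As a rough illustration of an NFA validating a sequence as it unfolds, the sketch below simulates one in software by tracking the set of states that are active after each event. This is my own toy, not how the Automata Processor was programmed. The event names, the `NFA` class, the `ANY` wildcard, and the pattern itself (a failed payment eventually followed by a support call) are all assumptions made up for the example.

```python
ANY = "*"  # hypothetical wildcard symbol: matches every event


class NFA:
    """Simulates an NFA by keeping every currently active state."""

    def __init__(self, transitions, start, accepting):
        self.transitions = transitions  # {(state, symbol): {next states}}
        self.accepting = accepting
        self.active = {start}

    def step(self, symbol):
        """Consume one event; return True if an accepting state is reached."""
        next_states = set()
        for state in self.active:
            next_states |= self.transitions.get((state, symbol), set())
            next_states |= self.transitions.get((state, ANY), set())
        self.active = next_states
        return bool(self.active & self.accepting)


def build_detector():
    """Pattern: 'payment_failed', then any events, then 'support_call'."""
    transitions = {
        (0, ANY): {0},               # keep watching for a new match
        (0, "payment_failed"): {1},  # non-determinism: also start a match
        (1, ANY): {1},               # tolerate unrelated events in between
        (1, "support_call"): {2},    # pattern complete
    }
    return NFA(transitions, start=0, accepting={2})


events = ["login", "payment_failed", "browse", "support_call", "logout"]
detector = build_detector()
hits = [detector.step(event) for event in events]
print(hits)  # one flag per event, True where the pattern completes
```

Because several states can be active at once, the detector can follow many partial matches in parallel without backtracking, which is what makes this style attractive for real-time streams.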

Thoughts: The Creative Web of Association and Clustering

Thoughts, the mental formations arising from and influencing our perceptions and feelings, find their technological counterpart in the processes of association and clustering within unsupervised learning. This dimension of thought involves the parallel exploration of felt experiences and perceived predictions, organizing information into coherent patterns without explicit guidance. Such unsupervised learning processes, akin to human creativity, underscore the capacity for thoughts to form, dissolve, and reorganize, leading to novel insights and understandings.

The output of thoughts is more thoughts. Thoughts are more than functions that spit out some answer like “George Washington” or “42”. A thought is a system of relationships, a knowledge graph. Thoughts can link to other thoughts, creating yet more thoughts.

In the world of AI, knowledge graphs and Prolog are two prime examples of encoded thoughts.
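To show what "thoughts linking to other thoughts" might look like in practice, here is a minimal sketch of a knowledge graph stored as subject-predicate-object triples, with one Prolog-style rule applied until no new facts appear. The facts, the predicate names, and the helper functions `query` and `infer_is_a` are hypothetical and chosen only to echo the analogies in this post. This is not a real Prolog engine or graph database.

```python
# Hypothetical facts, each a (subject, predicate, object) triple.
triples = {
    ("customer_churn", "is_a", "kpi"),
    ("kpi", "is_a", "feeling"),
    ("late_delivery", "causes", "customer_churn"),
}


def query(graph, subject=None, predicate=None, obj=None):
    """Return every triple matching the given fields (None matches anything)."""
    return {
        t for t in graph
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    }


def infer_is_a(graph):
    """Apply is_a(X, Z) :- is_a(X, Y), is_a(Y, Z) until nothing new appears."""
    facts = set(graph)
    while True:
        is_a = query(facts, predicate="is_a")
        derived = {
            (x, "is_a", z)
            for (x, _, y) in is_a
            for (y2, _, z) in is_a
            if y == y2
        } - facts
        if not derived:
            return facts
        facts |= derived


thoughts = infer_is_a(triples)
print(sorted(query(thoughts, subject="customer_churn")))
```

The inferred triple was never stated directly. It exists only because two other thoughts were linked, which is the sense in which the output of thoughts is more thoughts.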

Consciousness: The CPU of Experience

Lastly, consciousness – the aggregate responsible for the serial processing of experiences and decision-making – is likened to a complex, now familiar LLM. This singular, integrative function of consciousness, capable of navigating through the myriad inputs of thoughts and perceptions to decide on a course of action, mirrors the sophisticated decision-making processes of advanced AI systems. It highlights the serial nature of consciousness, orchestrating a cohesive response from the parallel streams of sensory inputs, feelings, perceptions, and thoughts.

While what most consider to be “consciousness” doesn’t equate to LLMs, what matters is not the structure but the process. An LLM is a thing, and so is a brain. It’s the interplay with the world, the process, that we’re aware of as our consciousness.

Our human intelligence is a system within a system at scale. Meaning, it’s not one or five things, but simultaneous tension between the relationships of things, each scaled to large numbers. That’s immense numbers of cells, orders of magnitude more relationships between cells (mostly synapses), and Jupiter-sized dust-storms of experiences with very many other things.

The LLMs we know today are scaled out to billions of parameters and hundreds of trillions of bytes of data. But the other parts (sensors, supervised and unsupervised ML models) are still subject to reductionist thinking that gets us all in a lot of trouble. Reductionist thinking gives us the illusion that we know what’s going on. It’s that very flawed assumption that if we understand the parts, the whole will follow.

This exploration into the parallels between the Five Aggregates of Buddhism and ML/AI not only offers a different perspective on ancient philosophical concepts but also sheds light on the complexities of intelligence, which are sometimes glossed over in the current AI hype. My goal for this blog was just to point out that sentience, or at least true intelligence, is a complex system, not a set of things, no matter how intricate those things might be.

Faith and Patience,

Reverend Dukkha Hanamoku